Geoffrey Morrison/CNET

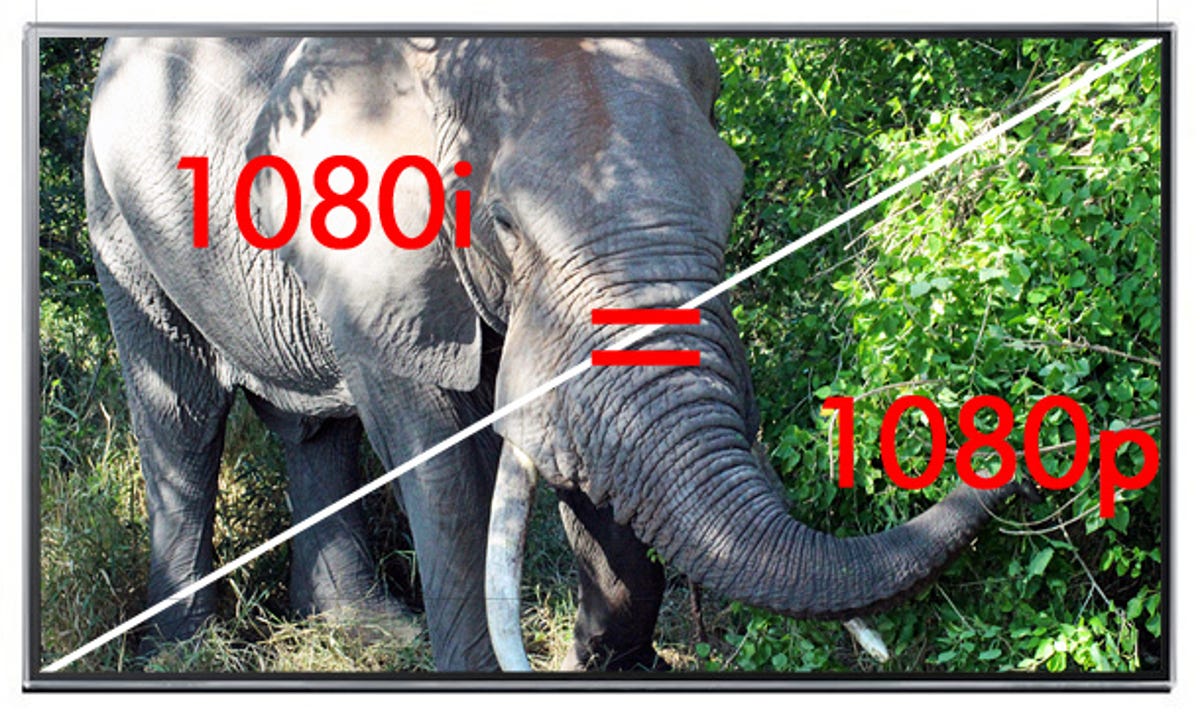

There still seems to be some confusion about the difference between 1080i and 1080p. Both are 1,920×1,080-pixel resolution. Both have 2,073,600 pixels. From one perspective (frame rate), 1080i is actually greater than Blu-ray’s 1080p/24. And you can’t even get a full 1080p/60 source other than from a PC, a camcorder, or some still cameras that shoot video.

True, 1080i and 1080p aren’t the same thing, but they are the same resolution. Let the argument commence…

1080i and 720p

Because our TV world is based around 60Hz, and because there’s a limit to how much resolution can be transmitted over the air (because of bandwidth and MPEG compression), the two main HDTV resolutions are 1080i and 720p. Let’s start with 720p, as it’s the easier one to understand.

OK, 720p is 1,280×720 pixels, running at 60 frames per second (fps). This is the format used by ABC, Fox, and their various sister channels (like ESPN). I’ve seen some reader comments in response to other articles I’ve written ridiculing ABC/Fox for this “lower” resolution, but that’s unfair for two big reasons. First, in the late ’90s when all this was happening, there were no 1080p TVs. Sure, we all knew they were coming, but it was years before they started shipping (now, almost all TVs are 1080p). The other big reason was sports. Both ABC and Fox have big sports divisions, which played a big role in their decision to go with 720p. This is because, when it comes down to it, fast motion looks better at 60fps (more on this later).

The 1080i designation is 1,920×1,080 pixels, running at 30 frames per second. This is what CBS, NBC, and just about every other broadcaster uses. The math is actually pretty simple: 1080 at 30fps is the same amount of data as 720 at 60 (or at least, close enough for what we’re talking about).
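To put numbers on “close enough,” here is a quick back-of-the-envelope sketch. It only counts raw pixels per second before any compression, so it says nothing about the actual broadcast bitrate; the function name `pixels_per_second` is just something I made up for the illustration.

```python
# Raw (uncompressed) pixel throughput. Real broadcasts are compressed,
# so this only compares the amount of picture data before encoding.

def pixels_per_second(width, height, frames_per_second):
    """Return how many pixels per second a format carries."""
    return width * height * frames_per_second

rate_1080i = pixels_per_second(1920, 1080, 30)  # 30 full frames (60 fields)
rate_720p = pixels_per_second(1280, 720, 60)    # 60 full frames

print(f"1080i: {rate_1080i:,} pixels/s")         # 1080i: 62,208,000 pixels/s
print(f"720p:  {rate_720p:,} pixels/s")          # 720p:  55,296,000 pixels/s
print(f"ratio: {rate_1080i / rate_720p:.3f}")    # ratio: 1.125
```

So the two formats are within about 12.5 percent of each other, which is the “close enough” in question.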

How, you might ask, does this 30fps work on TVs designed for 60? With modern video processing, the frame rate doesn’t matter much. Back in the olden days of the ’90s, however, we weren’t so lucky. The 1080 image is actually “interlaced.” That’s where the “i” comes from. What this means is that even though there are 30 frames every second, it is actually 60 fields. Each field is 1,920×540 pixels, every 60th of a second. Of the 1,080 lines of pixels, the first field will have all the odd lines, the second field will have all the even lines. Your TV combines these together to form a complete frame of video.
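If the odd/even split is hard to picture, this minimal sketch may help. It treats a frame as a plain list of rows (a stand-in for real pixel data) and the helper name `split_into_fields` is hypothetical, not anything a broadcaster actually runs.

```python
def split_into_fields(frame):
    """Split a frame (a list of rows) into two interlaced fields.

    Lines are numbered from 1, so the "odd" field holds lines 1, 3, 5, ...
    (list indexes 0, 2, 4, ...) and the "even" field holds lines 2, 4, 6, ...
    """
    odd_field = frame[0::2]
    even_field = frame[1::2]
    return odd_field, even_field

# A stand-in 1,080-line frame: each "row" is just a label with its line number.
frame = [f"line {n}" for n in range(1, 1081)]
odd_field, even_field = split_into_fields(frame)

print(len(odd_field), len(even_field))  # 540 540
print(odd_field[:3])                    # ['line 1', 'line 3', 'line 5']
print(even_field[:3])                   # ['line 2', 'line 4', 'line 6']
```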

What about 1080p?

Yes, what about it? Your 1080p TV accepts many different resolutions, and converts them all to 1,920×1,080 pixels. For most sources, this is done through a process known as upconversion. Check out my article, appropriately called “What is upconversion?” for more info on that process.

When your TV is sent a 1080i signal, however, a different process occurs: deinterlacing. This is when the TV combines the two fields into frames. If it’s done right, the TV repeats each full frame to create 60 “fps” from the original 30.

If it’s done wrong, the TV instead takes each field, and just doubles the information. So you’re actually getting 1,920×540p. Many early 1080p HDTVs did this, but pretty much no modern one does. In a TV review, this is the main thing we’re checking when we test deinterlacing prowess.
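The difference between the “right” and “wrong” approaches can be sketched in a few lines. The names `weave` and `line_double` are my labels for the two behaviors described above, and rows are again just labels standing in for lines of pixels; a real TV’s processor is far more involved.

```python
def weave(odd_field, even_field):
    """Interleave two fields back into one full frame (done right)."""
    frame = []
    for odd_row, even_row in zip(odd_field, even_field):
        frame.append(odd_row)
        frame.append(even_row)
    return frame

def line_double(field):
    """Repeat every row of a single field (done wrong): the frame has the
    right number of lines, but only half of them carry unique detail."""
    frame = []
    for row in field:
        frame.append(row)
        frame.append(row)
    return frame

odd_field = ["line 1", "line 3", "line 5"]
even_field = ["line 2", "line 4", "line 6"]

woven = weave(odd_field, even_field)
doubled = line_double(odd_field)

print(woven)    # ['line 1', 'line 2', 'line 3', 'line 4', 'line 5', 'line 6']
print(doubled)  # ['line 1', 'line 1', 'line 3', 'line 3', 'line 5', 'line 5']
print(len(set(woven)), len(set(doubled)))  # 6 3
```

Both results are six lines tall, but the doubled frame only contains three distinct lines, which is the 540-lines-of-real-detail problem in miniature.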

If only it were that easy (if that is even easy)

However, there’s a problem. Let’s take the example of the sports from earlier. ABC and Fox very consciously made the choice to go with 720p over 1080i. As we said earlier, this largely wasn’t based on some limitation of the technology or being cheap. It’s that 1080i is worse with fast motion than 720p.

At 60 frames per second (720p), the camera is getting a full snapshot of what it sees every 60th of a second. With 1080i, on the other hand, it’s getting half a snapshot every 60th of a second (1,920×540 every 60th). With most things, this isn’t a big deal. Your TV combines the two fields. You see frames. Everything is happy in TV land.

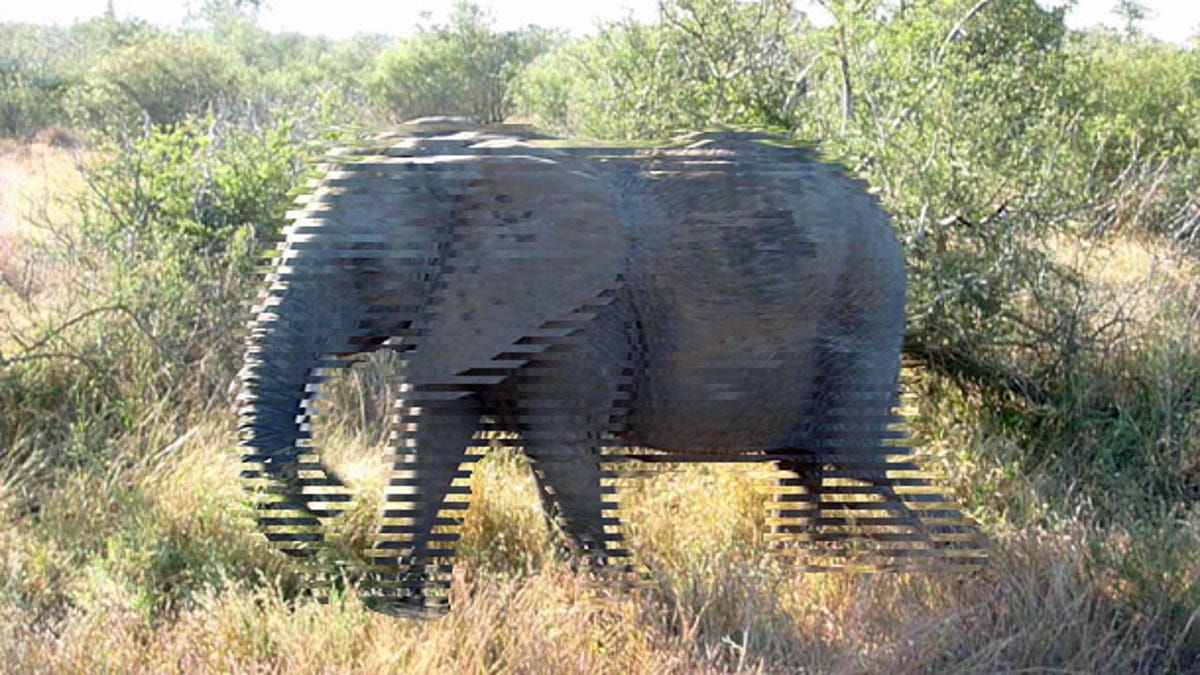

But let’s say there’s a sportsball guy running across your screen from right to left. The camera captures a field of him, then a 60th of a second later, it captures another field of him. Uh-oh, he wasn’t nice enough to stand still while this happened. So now field “A” has him in one place (represented by half the image’s pixels) and field “B” has him slightly to the left (represented by half the image’s pixels). If the TV were to combine these two fields as-is, the result would look like someone dragged a comb across him. Conveniently, this artifact is called combing.

Using sports images gets their respective organizations’ feathers all ruffled. So instead, here are two frames from a video I took. I named him Fred.

Poor Fred; look what bad deinterlacing did to him.

Obviously Fred wasn’t moving fast enough to get combing artifacts this bad, but this should give you an idea what’s going on. The TV’s deinterlacing processor has to notice the motion, as in the difference between the fields, and compensate. Usually it does this by, basically, averaging the difference. In effect, fudging the edges. Yep, that’s right, it’s making stuff up. Or, if you want to argue it differently, it’s manipulating the image so there are no artifacts, at the expense of absolute resolution. Don’t worry, though, TV processing has gotten really good at this (and usually does a lot more than just “averaging”) so the result is rather seamless.
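Here is a toy version of both the problem and the crude fix. It assumes a tiny 8×6 grayscale “screen” with a bright bar that moves left between fields, and the `blend` function is a deliberately naive average of neighboring lines. It is my own illustration of the “averaging” idea, not how any particular TV does it.

```python
WIDTH, HEIGHT = 8, 6

def capture(bar_left):
    """Return a HEIGHT x WIDTH frame with a 2-pixel-wide bright bar."""
    return [[255 if bar_left <= x < bar_left + 2 else 0 for x in range(WIDTH)]
            for _ in range(HEIGHT)]

def weave(first, second):
    """Take alternate lines from two different moments in time."""
    return [first[y] if y % 2 == 0 else second[y] for y in range(HEIGHT)]

def blend(frame):
    """Average every row with a neighbor, which comes from the other field."""
    out = []
    for y, row in enumerate(frame):
        neighbor = frame[y + 1] if y + 1 < len(frame) else frame[y - 1]
        out.append([(a + b) // 2 for a, b in zip(row, neighbor)])
    return out

def show(frame):
    """Print a frame using '.' for black, '+' for gray, '#' for white."""
    shades = {0: ".", 127: "+", 255: "#"}
    for row in frame:
        print("".join(shades[value] for value in row))
    print()

moment_a = capture(bar_left=4)  # when field "A" is captured
moment_b = capture(bar_left=2)  # a 60th of a second later, moved left

combed = weave(moment_a, moment_b)
show(combed)         # rows alternate between "....##.." and "..##....": combing
show(blend(combed))  # every row becomes "..++++..": no comb, but a softer smear
```

The comb teeth disappear, but the crisp two-pixel bar turns into a dimmer four-pixel smear. That is the trade of absolute resolution for a clean image.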

Now this is where an argument about 1080p — real 1080p — becomes worthwhile. A full 60-frame-per-second 1080p video would be awesome. Not because it’s a higher resolution than 1080i, but because it’s a higher frame rate (and not interlaced), so motion will be more detailed. However, it’s highly unlikely most people would ever see a difference. Compression artifacts in the source or edge enhancement in the display are far more detrimental to the image than deinterlacing. Reducing either of those two factors would have a bigger effect on the image. Check out “When HD isn’t HD” for more info on that. So with full 1080p, the subtle increase in motion detail isn’t likely to be noticed.

And 1080p is even less important for movies.

Movies (and the beauty of 3:2)

Movies are, and will be for the foreseeable future, 24 frames per second. Sure, James Cameron, Peter Jackson, and even Roger Ebert want to up the frame rate, but that’s going to be an uphill battle. When it comes down to it, people equate 24fps with the magic of movies and higher frame rates with the real-world reality of video. Changing people’s perception is a lot harder than twisting a dial on a video camera. (Note that I’m not arguing for or against higher frame rates here. Maybe in a future article.)

Nearly every Blu-ray on the market is 1080p/24, or 1,920×1,080 pixels at 24 frames per second. As we’ve discussed, this is actually a lower frame rate than 1080i’s 30 frames per second. Of course, the average Blu-ray is much better-looking than your average 1080i signal (from cable/satellite, etc.). This is most often due to other factors, like compression. Once again, check out “When HD isn’t HD.”

For that matter, Blu-ray isn’t even capable of 1080p/60. At least, not yet. It maxes out at (wait for it) 1080i! Funny how that works. There are a few ways to get real 1080p/60 video, namely from a PC or by shooting it with a newer camcorder or digital still camera’s video function, but even in those cases you can’t burn the video to a Blu-ray for playback at 1080p/60.

Those of you astute at math will be wondering: how do you display 24fps on a 60fps display? Not well, honestly.

The trick is a 2:3 sequence of frames (colloquially referred to as 3:2, from 3:2 pull-down, the method used).

Imagine film frames A, B, C, D, and E. When shown on a 60Hz TV, they’re arranged in a 2:3 pattern, like this:

AA, BBB, CC, DDD, EE

…and so on. The first film frame is doubled, the second frame is tripled, the third is doubled, and so on. Although this means 60Hz TVs can operate with minimal processing effort, it results in a weird judder due to the bizarre duplication of frames. This is most noticeable during horizontal pans, where the camera seems to jerkily hesitate slightly during the movement.
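The 2:3 pattern is simple enough to sketch, and doing so shows why it lands exactly on 60. The function name `pulldown_2_3` is mine, and this only models which frame is on screen for each refresh, not the field-level details of real pull-down.

```python
from itertools import cycle

def pulldown_2_3(film_frames):
    """Repeat film frames in a 2, 3, 2, 3, ... pattern."""
    shown = []
    for frame, repeats in zip(film_frames, cycle([2, 3])):
        shown.extend([frame] * repeats)
    return shown

print("".join(pulldown_2_3("ABCDE")))  # AABBBCCDDDEE

one_second_of_film = list(range(24))
print(len(pulldown_2_3(one_second_of_film)))  # 60
```

Twelve frames shown twice plus twelve shown three times is 60, so the math works out. The judder comes from the fact that alternating frames stay on screen for different lengths of time.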

Ideally, you’d be able to display duplicate frames without the 3:2 sequence. One of the potential advantages of 120 and 240Hz LCDs is the ability to display film content at a whole-number multiple of 24: 5x in the case of 120Hz, and 10x in the case of 240Hz. Sadly, not all 120 or 240Hz TVs have that ability. Some plasmas have the ability, but in many cases, it’s a flickering 48Hz (96Hz is way better, and offered on some high-end models).
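A quick way to see which refresh rates can show every film frame the same number of times is to check for divisibility by 24. This is just arithmetic; whether a given TV actually uses that cadence is up to the TV, and `repeats_per_film_frame` is a made-up helper name.

```python
FILM_FPS = 24

def repeats_per_film_frame(refresh_hz, film_fps=FILM_FPS):
    """Return how many refreshes each film frame gets, or None if uneven."""
    if refresh_hz % film_fps == 0:
        return refresh_hz // film_fps
    return None

for hz in (48, 60, 96, 120, 240):
    print(hz, repeats_per_film_frame(hz))
# 48 2
# 60 None
# 96 4
# 120 5
# 240 10
```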

As an aside, the Soap Opera Effect is the TV creating frames to insert in-between the real film frames to get up to 120 or 240Hz. This results in an ultra-smooth motion with film content that makes it look like video. People fall into two distinct categories when they see the Soap Opera Effect: Those who hate it and want to vomit, and crazy people (just kidding). For more info on this, check out “What is refresh rate?”

But what about games?

As I discussed in my “4K for the PS4? Who cares?” article, most video games aren’t actually the resolution they claim to be on the box (or that’s shown on your TV). Most are rendered (i.e., created) at a lower resolution, and then upconverted to whatever resolution you like. So I guess an argument could be made that these are 1080p, as that’s technically the number of pixels sent by the Xbox/PS3, but I’d argue that the actual resolution is whatever the game is rendered at. Case in point: Gran Turismo 5 is rendered at 1,280×1,080 pixels. This is 50 percent more pixels than 720p, but a third fewer than “true” 1080p. Upconversion is not true resolution, neither with regular video content, nor with game content.
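The Gran Turismo 5 comparison is easy to check with plain multiplication. This sketch uses only the resolutions mentioned above; the variable names are mine.

```python
def pixel_count(width, height):
    """Return the number of pixels in one frame."""
    return width * height

p720 = pixel_count(1280, 720)    # 921,600
gt5 = pixel_count(1280, 1080)    # 1,382,400
p1080 = pixel_count(1920, 1080)  # 2,073,600

print(f"vs. 720p:  {gt5 / p720 - 1:+.0%}")   # vs. 720p:  +50%
print(f"vs. 1080p: {gt5 / p1080 - 1:+.0%}")  # vs. 1080p: -33%
```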

If you’re a PC gamer, however, you can get 1080p/60. For the most part, PC games render at the same resolution the video card outputs. For more info on using your PC with your TV, check out “How to use your TV as a computer monitor for gaming, videos, and more.”

Bottom line

While 1080i and 1080p have the same number of pixels, they do have different frame rates (and one is interlaced). The reality is, other than PC games, there isn’t any commercially available “real” 1080p content. It’s either 1080i content deinterlaced by your TV, 1080p/24 content from Blu-ray, or upconverted content from console games.

That’s not to say it wouldn’t be great if we did have more 1080p/60 sources, but the slightly better motion detail would not be a huge, noticeable difference. In other words, you’re not really missing out on anything with 1080i.

Got a question for Geoff? First, check out all the other articles he’s written on topics like HDMI cables, LED LCD vs. plasma, Active vs Passive 3D, and more. Still have a question? Send him an e-mail! He won’t tell you what TV to buy, but he might use your letter in a future article. You can also send him a message on Twitter @TechWriterGeoff or Google+.