Josh Miller/CNET

It wasn’t all that long ago that a 3.2-megapixel camera on the back of your smartphone was reason to show off. Now, phone-makers like Nokia and Samsung are forging a new frontier of mobile photography aimed at incorporating the technology of a point-and-shoot into the smartphone bundle.

Nokia gets camera savvy with the Nokia Lumia 1020’s 41-megapixel sensor and software that saves huge images for lossless cropping. With the niche Samsung Galaxy S4 Zoom, the electronics giant departs from the streamlined smartphone shape by essentially cramming a cellular radio into a point-and-shoot camera with a 10x optical zoom lens.

These two examples of smartphone cameras that go above and beyond the typical phone’s shape, experience, and processing capability are just the beginning. We’re transitioning into an era of deep convergence that will only further merge the standalone camera into your pocket device. Here’s what’s coming up.

55-megapixel sensors

Right now most smartphones have between 5- and 13-megapixel cameras, but did you know your phone might already be capable of housing a 55-megapixel shooter?

The optical glass and image sensors play a huge role, sure, but so do other elements behind the scenes, like image processing chips and the device’s ability to write image files as quickly as the phones can process them.

For example, there’s Qualcomm’s Snapdragon 800 processor, which is starting to ship with high-end smartphones, like Korea’s version of the Samsung Galaxy S4, some models of the Sony Xperia Z Ultra, and LG’s “G” series of smartphones.

The 800 integrates two image signal processors (ISPs) into the chipset. That means every smartphone using this processor has the theoretical capability to support images up to 55 megapixels — not that phone-makers can or even want to implement the hardware and software required to make that happen.

We see Nokia tread this path with its 41-megapixel Lumia 1020, and with the Symbian-based 808 PureView before it, but this ultrahigh-megapixel count will be the exception, not the norm.

Smartphones will continue to nudge the size of image files higher, but we’ll also continue to see some systems go the other way, capturing large amounts of detail that resolve into relatively bite-size 4-, 5-, and even 6-megapixel morsels, like the “ultrapixels” found in the HTC One, for easier sharing and uploading.
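To put those megapixel counts in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes an uncompressed image stored at 3 bytes per pixel (8-bit RGB), which is an illustrative assumption and not how any particular phone saves photos; real JPEG files are far smaller. The function name is hypothetical.

```python
def uncompressed_megabytes(megapixels: float, bytes_per_pixel: int = 3) -> float:
    """Approximate size, in MB (10^6 bytes), of an uncompressed image."""
    pixels = megapixels * 1_000_000
    return pixels * bytes_per_pixel / 1_000_000


# Compare an "ultrapixel"-class sensor, a typical high-end phone,
# the Lumia 1020, and the Snapdragon 800's theoretical ceiling.
for mp in (4, 13, 41, 55):
    size = uncompressed_megabytes(mp)
    print(f"{mp}-megapixel image: about {size:.0f}MB before compression")
```

Under that assumption, the raw data grows in step with the pixel count, which is why processing and write speed matter as much as the sensor itself.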

Multiple front and rear cameras

In addition to the size of the image file a device can support, the smartphone’s processing chip dictates the number of cameras that phone-makers can assemble on a single handset. The Snapdragon 800’s four-camera support means that future phones could have more than the single front- and rear-facing cameras we’ve come to expect.

Suppress those 3D camera phone flashback jitters for now. A four-camera layout doesn’t necessarily signal a return of the LG Thrill or HTC Evo 3D with their ahead-of-their-time 3D photos and videos — though that’s certainly also a possibility.

Dual front-facing cameras, for instance, can be used to capture gestures you might use to control the phone’s interface. Paired with audio from multiple microphones, the voice and visual smarts can work together to refine touch-free navigation.

There’s also the element of contextual awareness, which uses a variety of sensory inputs — like the gyroscope, ambient light sensor, and, yes, even the front camera — to respond to a range of gestures and environments.

Multiple cameras, or even an array of lenses within one camera module, can gather much more information about the surrounding world. Face tracking, gaze estimation, and a better mapping of facial outlines and contours can improve things like automated focus and editing based on real facial structures, not just two eyes pointed squarely at the lens.

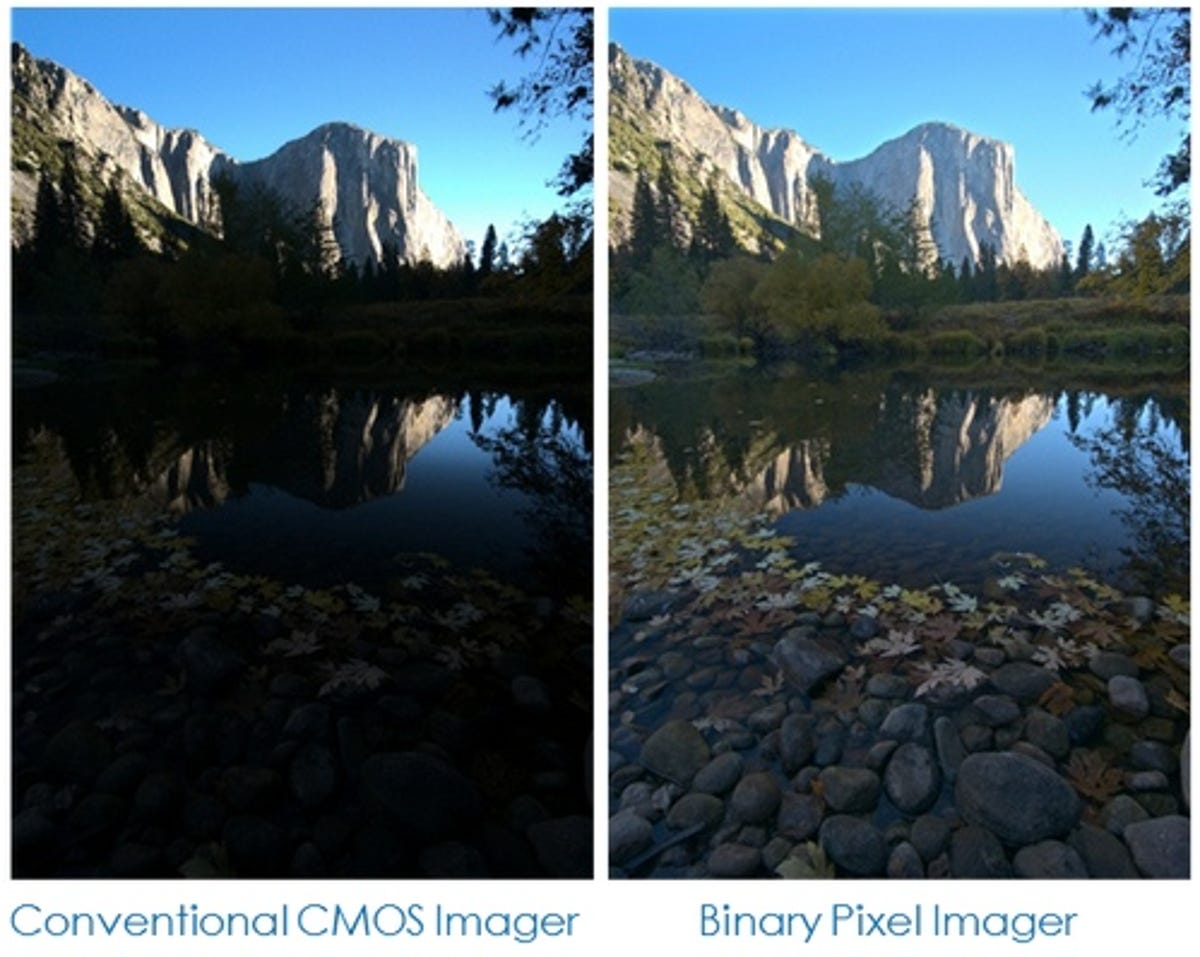

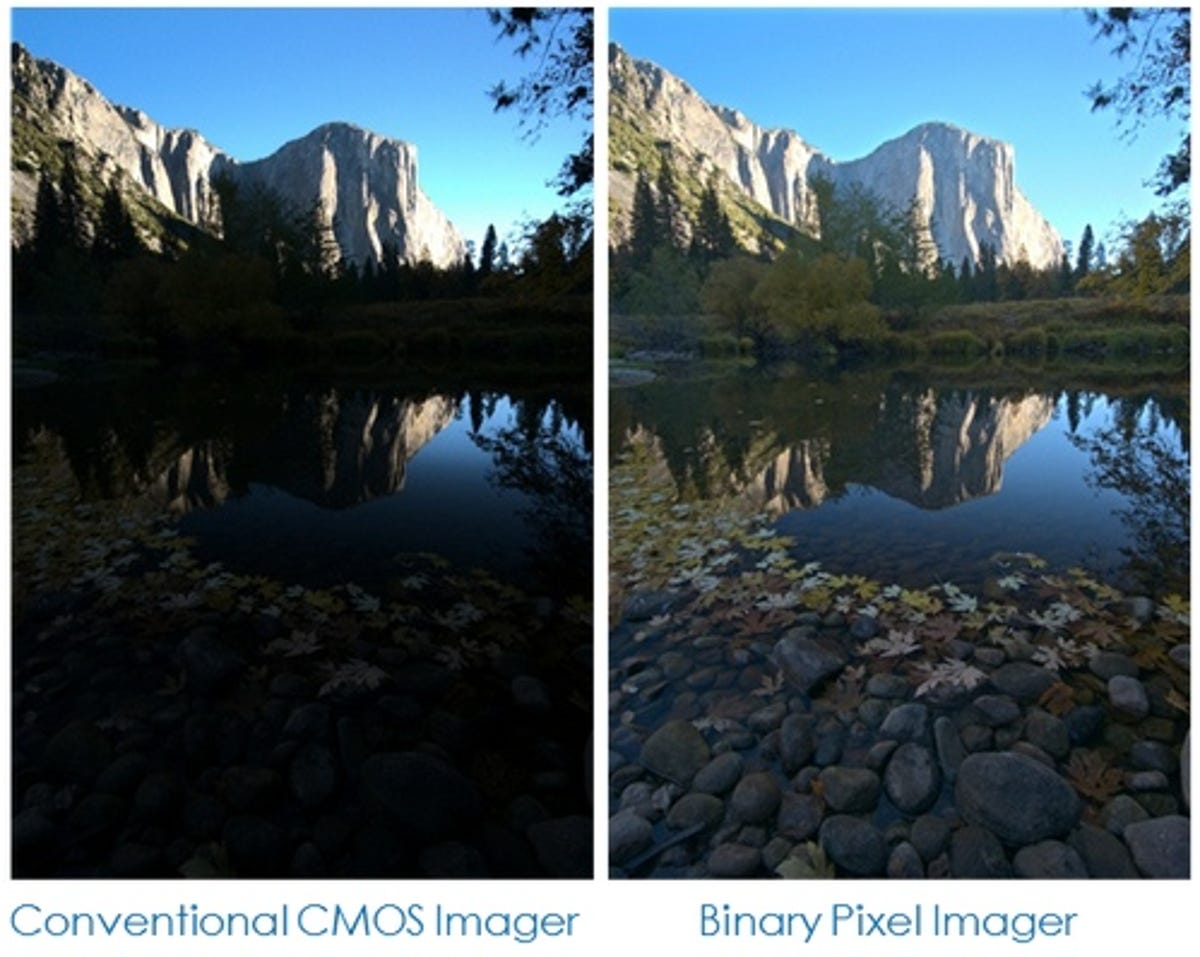

Ultra HDR

Smartphone cameras with settings for HDR, or high dynamic range, do a better job than the automatic camera mode of evenly capturing the deep blacks, midtones, and highlights of a high-contrast scene.

Now companies (like Rambus) are working on technology that takes HDR one step further. Called Ultra HDR, the next iteration of this process attempts to draw out details you often lose in shadow.

Rambus

Right now HDR is a separate mode you can turn on through the native camera or a third-party app — that goes for all mobile platforms. Today’s HDR feature typically works by combining images taken at three exposure levels into a single picture. The next generation of HDR is built into a CMOS sensor, so it’ll be part of the camera’s automatic settings by default — although the phone-maker can always choose to turn the HDR settings on or off through software. Ultra HDR will also work in real time with a single shot; it isn’t a composite of three different images.
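The multi-exposure approach can be sketched in a few lines of Python. This is a deliberately simplified weighted blend, not the algorithm any particular phone or app uses: each pixel is assumed to be a brightness value between 0 and 1, and frames that expose a pixel closer to mid-gray get more say in the result. The function names and sample values are hypothetical.

```python
from typing import List


def well_exposedness(value: float) -> float:
    """Weight a pixel by how close it sits to mid-gray (0.5) on a 0..1 scale."""
    # The tiny floor keeps the total weight above zero for clipped pixels.
    return max(1.0 - abs(value - 0.5) * 2.0, 0.0) + 1e-6


def merge_exposures(frames: List[List[float]]) -> List[float]:
    """Blend bracketed frames pixel by pixel using well-exposedness weights."""
    merged = []
    for pixels in zip(*frames):
        weights = [well_exposedness(p) for p in pixels]
        total = sum(weights)
        merged.append(sum(w * p for w, p in zip(weights, pixels)) / total)
    return merged


# Hypothetical one-row "images": [shadow, midtone, highlight]
under = [0.02, 0.25, 0.60]   # underexposed frame keeps the highlights
normal = [0.10, 0.50, 0.95]  # standard exposure
over = [0.40, 0.90, 1.00]    # overexposed frame lifts the shadows

result = merge_exposures([under, normal, over])
print([round(v, 2) for v in result])
```

In this toy example, the blended shadow pixel comes out brighter than in the standard exposure and the blended highlight comes out darker, which is the basic trade a three-shot HDR composite makes.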

Rambus expects its ultra HDR architecture for camera sensors to ship on phones in the first half of 2015, but competitors are sure to offer other approaches and innovations before then.

Better, crazier effects

Smartphones like the HTC One, Samsung Galaxy S4, and LG Optimus G Pro have built-in tools that do everything from stitching together action sequences in a single frame to magically erasing unwanted photo-bombers. Nokia’s creative camera software for its Lumia phones does much of the same.

The problem is, these tools can be clunky, and often don’t work well enough to actually be reliable. They also require premeditation, making you think about the scene you want to set up before the big moment, instead of catching something a little more spontaneous. And if the special effect doesn’t work, there aren’t always great ways to abort the effect and save the original image.

Jessica Dolcourt/CNET

Improvements in these early-stage effects are on their way, experts promise, and they’re all part of a larger bucket of image processing known as computational photography. The post-production stitching together of images for panorama, conventional HDR, and auto-adjustments for stills and videos all fall into this computational photography category, which is picking up more effects each year.

Creating 360-degree images like those using Android 4.2’s Photo Sphere feature hasn’t caught on yet with the masses, and we can’t yet set the camera focus after the fact a la the imperfect Lytro handheld, but these digital camera carryovers are a few more examples of interesting features that may be more popular later on down the road.

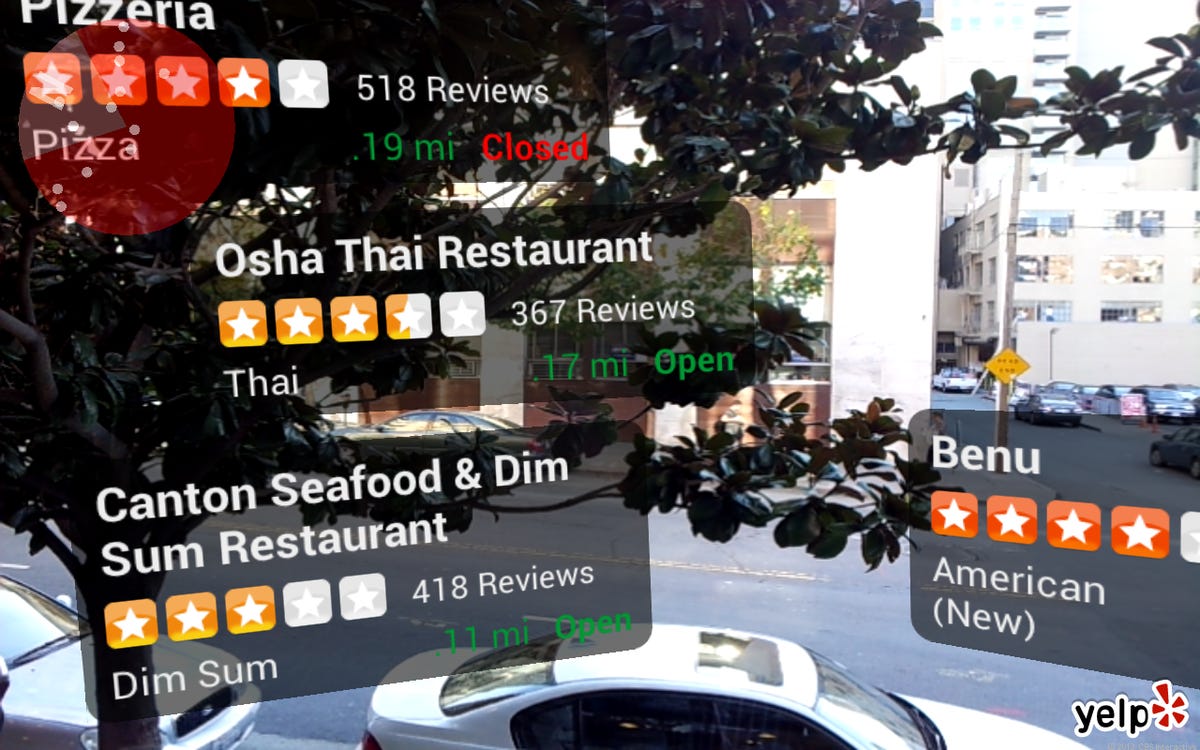

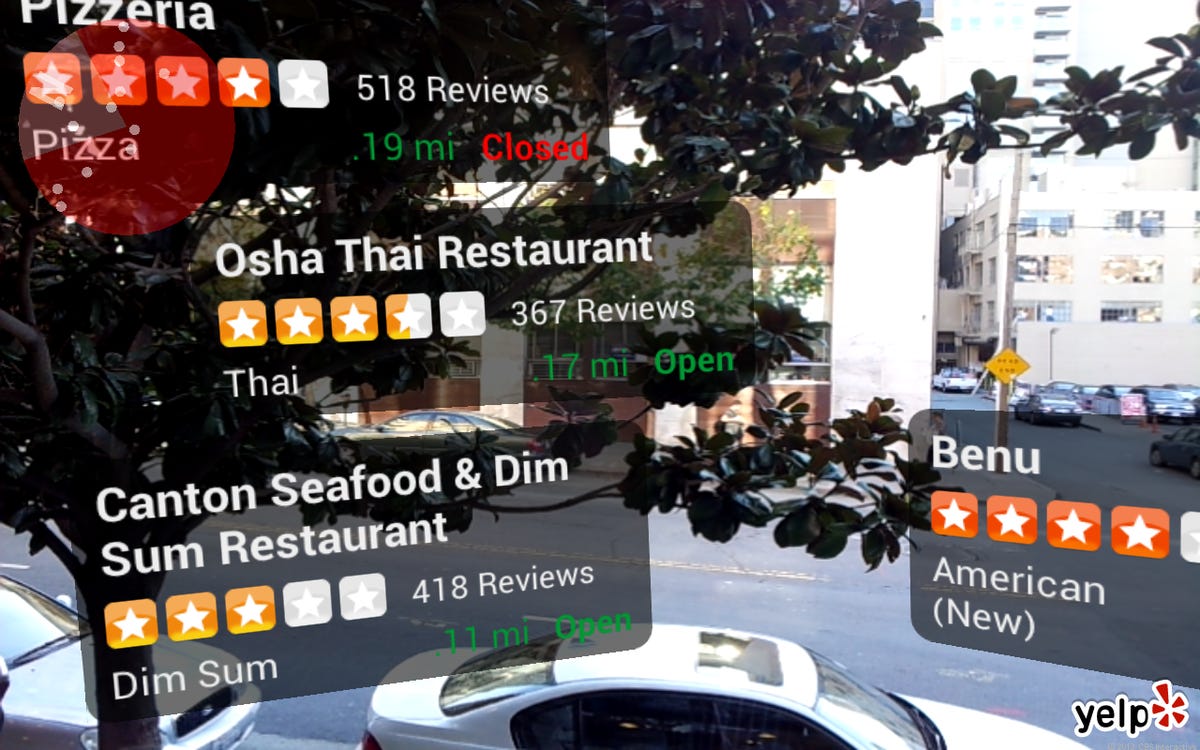

Augmented reality you care about

Augmented reality, where outside information or images overlay what you see through your camera’s viewfinder, is one of those things that’s easy to disassociate from the camera.

It’s also a feature that I forget to want to use. Instead of pointing a camera in a direction and hoping my phone will tell me which landmarks or restaurants are nearby or off in the distance (like Yelp’s Monocle app), I usually glue my face to maps for directions and slavishly shuffle along.

Well, there’s room for growth in this area, too. A little more development could pull up deeper touch-free information about a very specific location or object than we get today.

Jaymar Cabebe/CNET

Imagine a scenario, says Michael Ching, head of Rambus’ imaging department, in which holding up the phone to a museum painting, an item on a retail shelf, a billboard, or even a direction at an airport or the zoo would pop up more-detailed information about what the object is, or point out exactly which way to go without you typing a thing.

In order for this more nuanced development in augmented reality to blossom, the camera and the software that interprets images both have to become very good at accurately distinguishing objects from one another.

3D shooting with a single lens

Digital SLRs don’t need multiple cameras to shoot in 3D, and in the future, smartphones won’t, either. Since so many features and effects swim downstream from dSLRs and point-and-shoots to smartphones, keep your eye out for this kind of option to make its way onto your phone.

Effects applied to videos and panoramas are other good smartphone candidates for the future.

Smartphone cameras rising

In some cases, smartphone image quality will march along thanks to the trickle-down of features and technology from standalone cameras. Other times, we’ll see developers dream up tools, like enhanced uses for augmented reality, that are specific to the mobile experience.

Either way, it’s phone owners’ demand for better, clearer, more interesting pictures and effects that keeps driving mobile photography forward, and making your handset the most convenient-to-reach camera you could own.

To see the latest and best of what’s available now, check our current list of the best camera phones.

CNET

Smartphones Unlocked is a monthly column that dives deep into the inner workings of your trusty smartphone.