Want a better camera on your Android device? Google does, too.

For that reason, the company has overhauled the mobile OS’s plumbing. Google has built support for two higher-end photography features, raw image formats and burst mode, deep into Android, and it could expose those features so that programmers can tap into them, the company said.

Evidence of raw and burst-mode photos in the Android source code surfaced earlier in November, but Google has now commented on the technology. Specifically, spokeswoman Gina Scigliano said the support is now present in Android’s hardware abstraction layer (HAL), the part of the operating system that handles communications with a mobile device’s actual hardware.

“Android’s latest camera HAL (hardware abstraction layer) and framework supports raw and burst-mode photography,” Scigliano said. “We will expose a developer API [application programming interface] in a future release to expose more of the HAL functionality.”

An API means that programmers would be able to use the abilities in their own software. Google already uses burst mode in the Nexus 5 smartphone’s HDR+ mode, capturing multiple photos in rapid succession and merging them into a single high-dynamic-range photo.

Hardware still matters a lot for a smartphone’s photographic capabilities. But a better software foundation could mean Google’s mobile OS becomes more competitive, especially if programmers choose to tap into the full data that Android makes available.

Image-processing pipeline

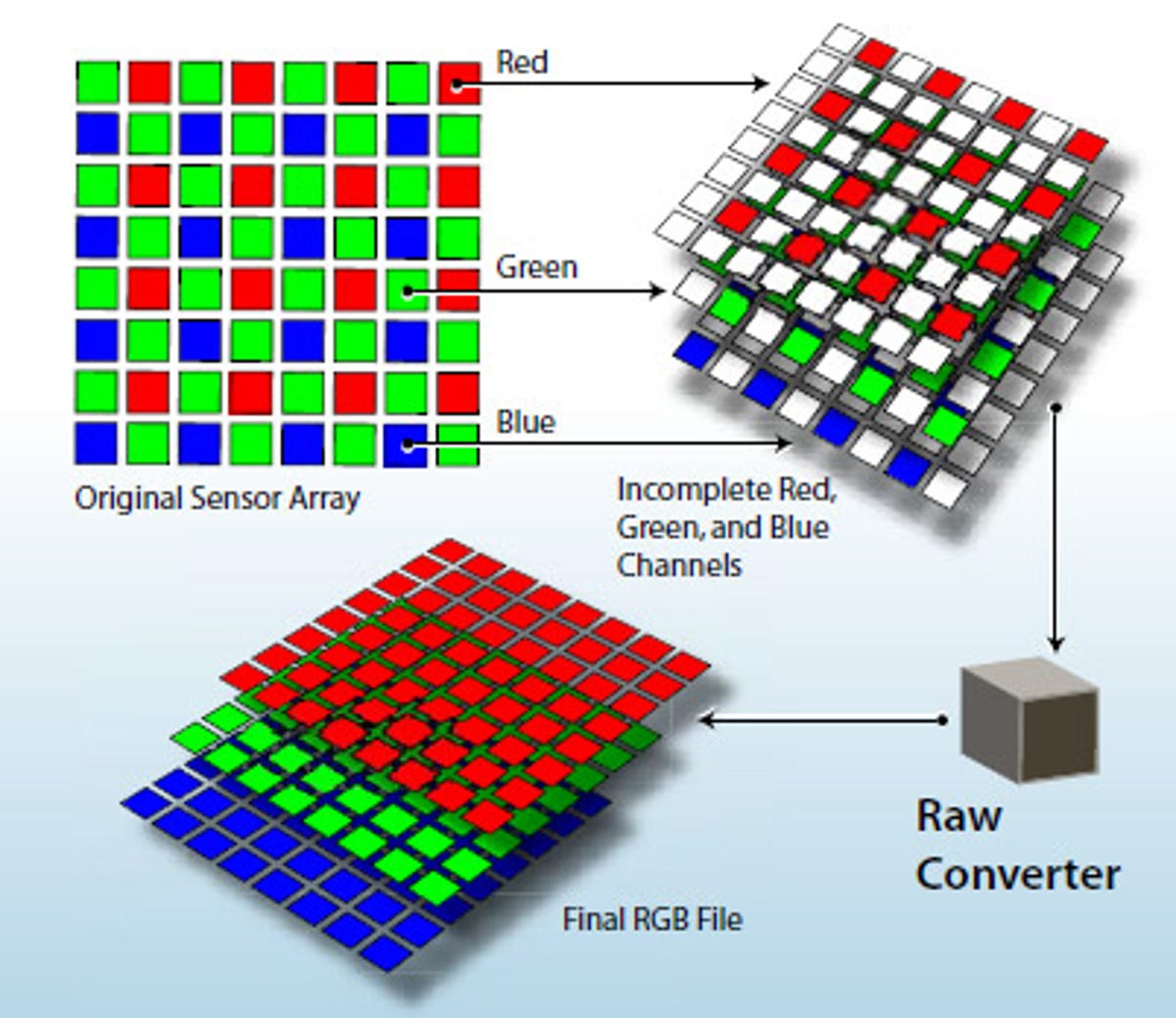

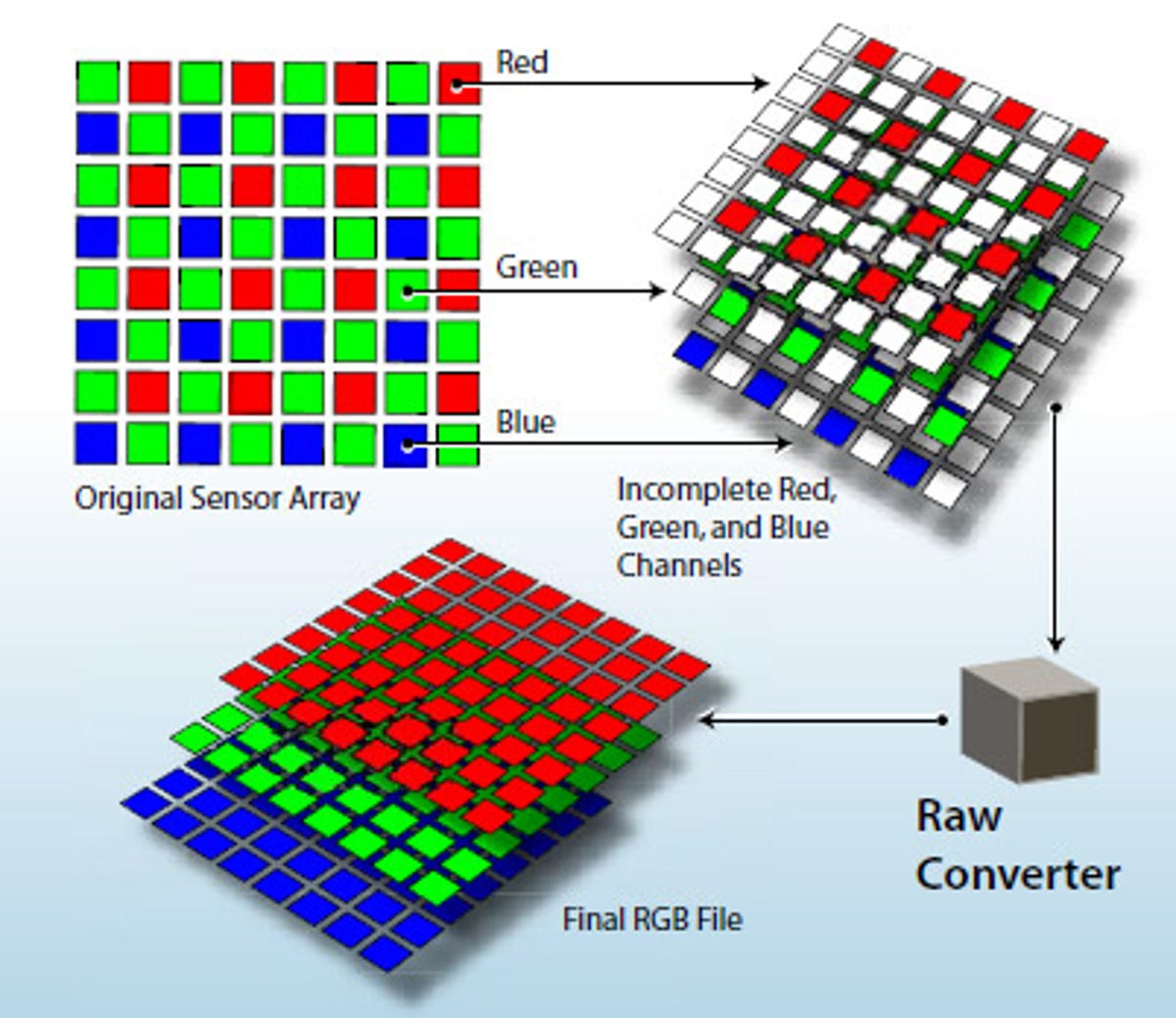

In modern digital photography, a camera consists not just of the lens and image sensor but also image-processing hardware and software that’s used to generate a photo. That includes options for features such as noise reduction, the elimination of unpleasant color casts, correction of lens problems, and combination of multiple photos into a single shot with a better balance of bright and dim areas.
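As a rough illustration of the last item, combining multiple photos into one better-balanced shot, here is a minimal Python sketch. It is not Google’s HDR+ algorithm or any Android API; the function name `merge_exposures`, the pixel lists, and the simple weighting scheme are all assumptions made for this example. Each frame’s pixel is divided by its relative exposure to estimate scene brightness, and mid-tone pixels are trusted more than near-black or clipped ones.

```python
from typing import List, Sequence


def merge_exposures(frames: Sequence[Sequence[float]],
                    exposures: Sequence[float]) -> List[float]:
    """Merge bracketed frames into one brightness estimate per pixel.

    frames: equal-length lists of pixel values in the range [0, 1].
    exposures: relative exposure of each frame (hypothetical units).
    """
    merged = []
    for i in range(len(frames[0])):
        weighted_sum = 0.0
        weight_total = 0.0
        for pixels, exposure in zip(frames, exposures):
            value = pixels[i]
            # Hat-shaped weight: highest at mid-gray, close to zero for
            # near-black or clipped pixels, which carry little information.
            weight = max(1e-6, 1.0 - abs(2.0 * value - 1.0))
            # Dividing by exposure puts every frame on the same scale.
            weighted_sum += weight * (value / exposure)
            weight_total += weight
        merged.append(weighted_sum / weight_total)
    return merged


# Two frames of the same two-pixel scene: a short and a 4x longer exposure.
short_frame = [0.1, 0.5]
long_frame = [0.4, 1.0]  # second pixel is clipped in the long exposure
result = merge_exposures([short_frame, long_frame], [1.0, 4.0])
```

In this toy case the clipped pixel in the long exposure gets almost no weight, so the merged value for that pixel comes almost entirely from the short exposure.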

All that image processing points to big changes in store for Android-based photography. The more advanced hardware abstraction layer means that Google will let programmers write more sophisticated camera apps that make more of the underlying data.

Such processing begins with the raw image-sensor data and typically produces something more convenient like a JPEG-format photo. Many photography enthusiasts, however, prefer to make the image-processing decisions themselves with software such as Apple Aperture or Adobe Lightroom, and Google’s approach could make that more feasible. Raw photography on mobile phones is arriving with Nokia’s Lumia 1520 and 1020, two Windows Phone models.

Raw photos, typically an option only on higher-end cameras, offer more flexibility and image quality than JPEGs, but they also require manual processing that makes them inconvenient. For photo enthusiasts, the hassle is often worth it. Nokia chose to package its raw data in Adobe’s openly documented DNG format, a move that makes it easier for those writing image-processing software. Scigliano wouldn’t comment on Google’s file-format plans for raw data.

Google also isn’t willing to commit to a schedule for delivering its new API so that third-party software will be able to use the new Android camera foundation. The company said only that it’ll ship “in a future release.”


Burst-mode basis

Google’s plans for a more advanced camera API should allow more sophisticated photography once the API is exposed, letting apps besides Google’s built-in Nexus 5 camera app take advantage of it.

“The core concept of the new HAL and future API is centered around burst-mode photography,” Scigliano said. “The basic idea is instead of taking a single shot with a given set of parameters, you instead have the power to queue up a request to take multiple shots each with different parameter settings such as exposure gain. The camera subsystem captures the burst of shots, which can be subsequently post-processed by the application layer.”
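The request-queue idea Scigliano describes can be sketched in a few lines of Python. This is a conceptual model only: Google has not published the developer API, so every name here (`CaptureRequest`, `Frame`, `capture_burst`, `fake_sensor`) and the exposure and gain fields are hypothetical stand-ins, and the "sensor" is a simple function rather than real hardware.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass(frozen=True)
class CaptureRequest:
    """Hypothetical per-shot settings; field names are illustrative only."""
    exposure_ms: float
    gain: float


@dataclass(frozen=True)
class Frame:
    """A captured frame paired with the request that produced it."""
    request: CaptureRequest
    pixels: List[float]


def fake_sensor(request: CaptureRequest) -> List[float]:
    """Stand-in for camera hardware: scales a fixed scene, clipping at 1.0."""
    scene = [0.01, 0.05, 0.2]
    return [min(1.0, v * request.exposure_ms * request.gain) for v in scene]


def capture_burst(requests: Sequence[CaptureRequest],
                  sensor: Callable[[CaptureRequest], List[float]] = fake_sensor
                  ) -> List[Frame]:
    """Run every queued request in order and return one frame per request."""
    return [Frame(request=r, pixels=sensor(r)) for r in requests]


# Queue three shots with different settings, then capture them as one burst.
burst = capture_burst([
    CaptureRequest(exposure_ms=1.0, gain=1.0),
    CaptureRequest(exposure_ms=4.0, gain=1.0),
    CaptureRequest(exposure_ms=4.0, gain=2.0),
])
```

Keeping each frame paired with its request means the application layer knows exactly which settings produced which shot when it post-processes the burst.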

Google’s description of Android’s new mechanism for handling camera hardware goes into more detail.

The first Android camera hardware interface “was designed as a black box with high-level controls,” not something that exposed much detail to apps. Some expanded capabilities arrived with version 2, which was in Android 4.2 Jelly Bean, but it wasn’t set up for detailed app control, either.

The bigger changes came with versions 3.0 and 3.1, which are designed to “substantially increase the ability of applications to control the camera subsystem on Android devices,” the documentation states. “The additional control makes it easier to build high-quality camera applications on Android devices that can operate reliably across multiple products while still using device-specific algorithms whenever possible to maximize quality and performance.”

For example, the new camera subsystem “results in better user control for focus and exposure and more post-processing, such as noise reduction, contrast, and sharpening,” and makes it easier for programmers to tap into a camera’s various functions.

Better Nexus 5?

Because digital photography depends so heavily on processing of the raw image data, Nexus 5 owners also could benefit from Google’s changes. That’s because a software upgrade can improve the performance of a camera that’s left reviewers unimpressed.

There are limits, of course. The performance of the lens and sensor has a lot to do with the quality of a photo. But Google is optimistic that software improvements will help the Nexus 5.

“The team is aware of the issues and is working on a software update that will be available shortly,” Scigliano said.